Code

We develop and maintain several analysis frameworks for neuroscience and biology more generally:

Cellpose is a generalist, deep learning-based segmentation algorithm written in Python that can precisely segment cells from a wide range of image types. You can try it on your own data on our website: https://www.cellpose.org. Cellpose can be applied to 2D and 3D imaging data without requiring 3D-labelled data. It has an easy-to-use graphical user interface for manual labeling and for curation of the automated results. It can also be used to train new models on user data, and for denoising, deblurring, or upsampling images. Software developers have integrated Cellpose into their own image processing software, including CellProfiler, ImagePy, ImJoy, aPeer, and Napari.

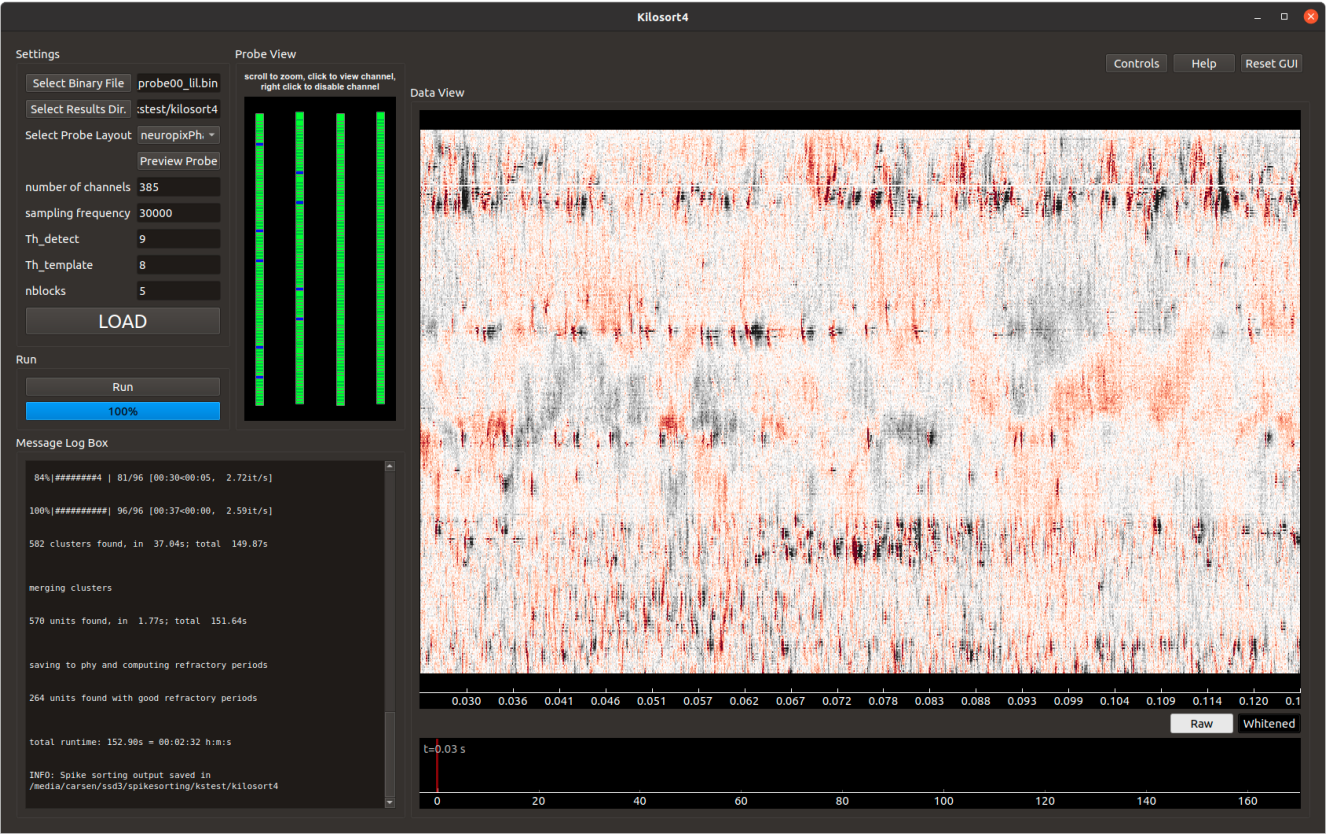

Kilosort is the most popular spike sorting tool for Neuropixels probes, and it also works on other probes. The software is GPU-accelerated to enable fast and accurate spike sorting. Kilosort first detects spikes, then uses these spikes to perform drift correction, an important step in most recording setups. Next, it clusters the spikes using novel graph-based clustering techniques. Kilosort4 is implemented in Python with an easy-to-use GUI.

Suite2p is a fast, accurate and complete pipeline written in Python that registers raw movies, detects active cells, extracts their calcium traces and infers their spike times. Suite2p runs on standard workstations, operates faster than real time, and recovers ~2 times more cells than the previous state-of-the-art methods. Its low computational load allows routine detection of ~25,000 cells simultaneously from recordings taken with standard two-photon resonant-scanning microscopes. In addition to its ability to detect cell somas, the detection algorithm can detect axonal segments, boutons, dendrites, and spines. Suite2p has an extensive GUI which allows the user to explore their data. Software developers have integrated Suite2p into their packages, such as those for multi-day cell alignment and photostimulation experiments.
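To give a feel for the registration step, here is a minimal NumPy sketch of rigid alignment by phase correlation, a common technique for estimating the translation between a frame and a reference image. It is an illustration under stated assumptions, not Suite2p's implementation: the function name `estimate_shift` is hypothetical, only whole-pixel shifts are recovered, and the frame is assumed to be a circularly shifted copy of the reference.

```python
import numpy as np

def estimate_shift(reference, frame):
    """Estimate the integer (dy, dx) roll that aligns `frame` to `reference`.

    Hypothetical helper for illustration; both inputs are 2D arrays of equal shape.
    """
    f_ref = np.fft.fft2(reference)
    f_frm = np.fft.fft2(frame)
    # Normalized cross-power spectrum: keep phase, discard amplitude
    cross_power = f_ref * np.conj(f_frm)
    cross_power /= np.abs(cross_power) + 1e-12
    correlation = np.fft.ifft2(cross_power).real
    # The correlation peak marks the shift; indices past the midpoint wrap to negative shifts
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    shifts = [p if p <= n // 2 else p - n for p, n in zip(peak, correlation.shape)]
    return int(shifts[0]), int(shifts[1])

# Usage: simulate a frame displaced by brain motion, then undo the displacement
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
frame = np.roll(reference, shift=(3, -2), axis=(0, 1))
dy, dx = estimate_shift(reference, frame)
registered = np.roll(frame, shift=(dy, dx), axis=(0, 1))
```

Real recordings also call for subpixel and non-rigid corrections, which this sketch leaves out.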

Facemap is a tool for extracting behavioral features from mouse face videos and using them to predict neural activity. Facemap can be used to extract a 500-dimensional summary of the motor actions visible on the mouse’s face by applying singular value decomposition (SVD) to the facial motion. It can also be used to track keypoints on the mouse face, using a state-of-the-art deep neural network. Facemap also includes a 1D convolutional neural network to predict neural activity from keypoints or SVDs, which is two times more accurate than previous approaches. Facemap’s GUI enables movie playback with behavioral feature tracking and neural activity.
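The SVD summary described above can be sketched in a few lines of NumPy. This is a simplified illustration, not Facemap's code: it assumes "facial motion" means the absolute difference between consecutive frames, it loads the whole video into memory, and the function name `motion_svd` and its parameters are hypothetical.

```python
import numpy as np

def motion_svd(frames, n_components=500):
    """Summarize video motion with its top singular vectors.

    frames: array of shape (n_frames, height, width).
    Returns spatial masks (k, height, width) and time courses (n_frames - 1, k),
    where k = min(n_components, number of available components).
    """
    frames = np.asarray(frames, dtype=np.float64)
    # Motion energy (assumed definition): absolute change between consecutive frames
    motion = np.abs(np.diff(frames, axis=0))
    X = motion.reshape(motion.shape[0], -1)
    X = X - X.mean(axis=0)  # center each pixel over time
    U, S, Vt = np.linalg.svd(X, full_matrices=False)
    k = min(n_components, S.size)
    masks = Vt[:k].reshape(k, *frames.shape[1:])
    # Project the motion onto each mask to get one time course per component
    time_courses = X @ Vt[:k].T
    return masks, time_courses

# Usage on a small synthetic video
rng = np.random.default_rng(0)
video = rng.random((20, 8, 10))
masks, time_courses = motion_svd(video, n_components=5)
```

For full-length videos the matrix is too large to decompose at once, so a practical implementation would process the frames in chunks.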

Rastermap is a tool for visualizing large-scale neural activity by applying a one-dimensional manifold embedding. To preserve both local and global structure, Rastermap combines manifold discovery and clustering. To capture temporal relationships among clusters, we compute not just the instantaneous correlations between cluster activities but also the cross-correlations of the clusters. Next, we sort these clusters to optimize local and global distance preservation. The sorting is then upsampled so that neurons can be assigned to their most correlated place in the one-dimensional embedding. This enables Rastermap to find sequences in visual cortical neural activity evoked by virtual reality corridors, which t-SNE and UMAP cannot do. Rastermap also outperforms these algorithms on structure preservation benchmarks. Rastermap can be run in a Jupyter notebook, on the command line, in the provided GUI, or inside Suite2p to explore the spatial relationships among neurons identified to have similar activity patterns.
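The idea of comparing clusters at time lags, rather than only instantaneously, can be illustrated with a short NumPy sketch. It is a simplified stand-in, not Rastermap's algorithm: the function name `lagged_similarity`, the `max_lag` parameter, and the choice to take the maximum over lags are all assumptions made for illustration.

```python
import numpy as np

def lagged_similarity(cluster_activity, max_lag=5):
    """Asymmetric similarity between clusters using time-lagged correlations.

    cluster_activity: array of shape (n_clusters, n_timepoints).
    Entry [i, j] is the largest approximate correlation between cluster i at
    time t and cluster j at time t + lag, for lag = 0..max_lag.
    """
    Z = np.asarray(cluster_activity, dtype=np.float64)
    # z-score each cluster so that products approximate correlations
    Z = Z - Z.mean(axis=1, keepdims=True)
    Z = Z / (Z.std(axis=1, keepdims=True) + 1e-12)
    n_clusters, n_t = Z.shape
    sim = np.full((n_clusters, n_clusters), -np.inf)
    for lag in range(max_lag + 1):
        # lag = 0 is the instantaneous correlation; larger lags ask "does j follow i?"
        cc = Z[:, : n_t - lag] @ Z[:, lag:].T / (n_t - lag)
        sim = np.maximum(sim, cc)
    return sim

# Usage: cluster 1 repeats cluster 0 three time steps later
rng = np.random.default_rng(0)
x = rng.standard_normal(500)
activity = np.stack([x, np.roll(x, 3)])
sim = lagged_similarity(activity, max_lag=5)
```

Because the matrix is asymmetric, `sim[0, 1]` is high while `sim[1, 0]` stays low in this example, which is the kind of directional information a sorting step can use to order clusters into sequences.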

Datasets

We share our large-scale recordings of mouse cortex on figshare:

Visual response dataset (Du et al 2025): recordings of 29,000 neurons in mouse primary visual cortex in response to up to 65,000 natural images; analysis code

Visual learning dataset (Zhong et al 2025): recordings of 50,000+ neurons simultaneously in mouse visual cortex as mice undergo unsupervised and task learning in virtual reality; analysis code

Facemap dataset (Syeda et al 2024): spontaneous neural activity from 50,000+ neurons in mouse visual cortex and sensorimotor cortex, simultaneous face camera recordings, and keypoint tracking training set

Neural responses to oriented stimuli (Stringer et al 2021): Responses of 20,000+ neurons in mouse primary visual cortex and higher order visual cortex; analysis code

Spontaneous neural activity in V1 (Stringer, Pachitariu et al 2019): Recordings of 10,000 neurons in visual cortex during spontaneous behaviors; analysis code

Eight-probe Neuropixels recordings during spontaneous behaviors by Nicholas Steinmetz, from Stringer, Pachitariu et al 2019

Neural responses to natural images (Stringer, Pachitariu et al 2019): Recordings of 10,000 neurons in primary visual cortex in response to 2,800 natural images; analysis code

V1 responses to drifting gratings (Pachitariu et al 2018): Responses of 10,000 neurons in mouse V1 during drifting gratings

We also shared the training data for the Cellpose algorithm: 70,000 segmented cells + other objects.

We also shared the simulations from the Kilosort4 paper: ephys simulations.

Powered by Quarto. © Marius Pachitariu & Carsen Stringer lab, 2023.